Combatting Overfitting: Dropout Layer in Neural Networks (Artificial Intelligence)

Overfitting is one of the major challenges in machine learning: the model fails to generalize and usually performs poorly on unseen (test) data. Several techniques help prevent overfitting, such as reducing model complexity (simplifying the network), weight regularization, and adding a dropout layer.

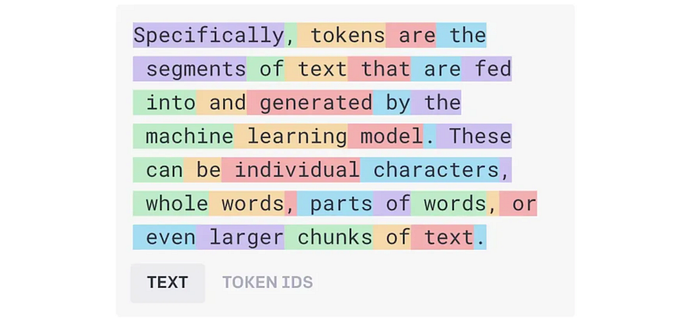

A dropout layer is a type of layer that can be added to neural networks. It is designed to improve the robustness of the model by randomly ‘dropping out’ a subset of neurons during the training process. This means that during each training iteration, a certain percentage of the neurons (and their connections) are temporarily removed from the network.

Essentially, you add another layer to your neural network in which a random subset of the inputs coming from the previous layer is turned off (set to zero) before the output is sent to the next layer. Adding a dropout layer does not change the dimensions of the data passing through it.
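To make the mechanism concrete, here is a minimal sketch in plain Python of what a dropout layer does to a list of activations. The function name `dropout` and its parameters are made up for illustration and are not a library API. The sketch also assumes the "inverted dropout" convention, where surviving values are scaled by 1 / (1 - rate); this is how the Keras `Dropout` layer is documented to behave, but other implementations may differ.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Apply inverted dropout to a list of activations (illustrative helper).

    Each value is zeroed with probability `rate`; survivors are scaled by
    1 / (1 - rate) so the expected sum of the output is unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        # At inference time dropout does nothing.
        return list(activations)
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]


rng = random.Random(0)      # fixed seed so the run is repeatable
layer_output = [1.0] * 8    # pretend output of the previous layer

dropped = dropout(layer_output, rate=0.5, rng=rng)
print(dropped)              # each entry is either 0.0 or 2.0
print(len(dropped))         # same length as the input
print(dropout(layer_output, rate=0.5, training=False))  # input returned unchanged
```

Note that the output always has the same length as the input: neurons are zeroed, not removed.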

Below is sample code using TensorFlow (Keras).

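```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout

# Placeholder values for illustration; set these to match your dataset.
input_shape = 20   # number of input features
num_classes = 10   # number of output classes

model = Sequential([
    Dense(64, activation='relu', input_shape=(input_shape,)),
    Dropout(0.5),  # randomly zero 50% of the 64 activations during training
    Dense(num_classes, activation='softmax')
])
```

In the example above, the dropout rate is set to 0.5, which means that at each training step every one of the 64 outputs of the first Dense layer is set to zero with probability 0.5, so about half of them are turned off on average. The output size is unchanged: it is still 64. Dropout is applied only during training; at inference time all neurons are kept.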

There is no fixed value for the dropout rate; you need to experiment on your own dataset to find a suitable value for your model.
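One simple way to run that experiment is to train the same model with a few candidate rates and keep the one with the lowest validation loss. The sketch below is only one possible approach. The helper names `sweep_dropout_rates` and `keras_validation_loss` are hypothetical, as are the data arguments `x_train`, `y_train`, `x_val` and `y_val`. The network mirrors the small model from the example, and integer class labels are assumed.

```python
def sweep_dropout_rates(candidate_rates, validation_loss_for):
    """Return (best_rate, results), where results maps each rate to its loss.

    `validation_loss_for` is a caller-supplied function that trains a model
    with the given dropout rate and returns its validation loss.
    """
    results = {rate: validation_loss_for(rate) for rate in candidate_rates}
    best_rate = min(results, key=results.get)
    return best_rate, results


def keras_validation_loss(rate, x_train, y_train, x_val, y_val,
                          num_classes, epochs=10):
    """One possible scorer: train a small network and return validation loss."""
    # Imported lazily so the sweep helper above works without TensorFlow.
    from tensorflow.keras import Input, Sequential
    from tensorflow.keras.layers import Dense, Dropout

    model = Sequential([
        Input(shape=(x_train.shape[1],)),
        Dense(64, activation="relu"),
        Dropout(rate),
        Dense(num_classes, activation="softmax"),
    ])
    # Assumes integer class labels; use categorical_crossentropy for one-hot labels.
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
    model.fit(x_train, y_train, epochs=epochs, verbose=0)
    return model.evaluate(x_val, y_val, verbose=0)
```

With your own data loaded as NumPy arrays, a call could look like `sweep_dropout_rates([0.1, 0.3, 0.5], lambda r: keras_validation_loss(r, x_train, y_train, x_val, y_val, num_classes))`. Because training is random, results for nearby rates can be noisy, so it may be worth repeating each run a few times before picking a value.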

Dropout is a powerful, yet simple technique in deep learning. It’s an essential tool for preventing overfitting and improving the generalization of neural networks. By randomly dropping neurons during training, it ensures that the network remains robust and less prone to the quirks of the training data.

Keep Learning and Keep Sharing..!!

Reference : https://jamesmccaffrey.wordpress.com/2018/05/11/neural-network-library-dropout-layers/